- done :)

https://abstractsportsviz.herokuapp.com/

- finalise presentation

- record everything (demo, presentation, trailer footage)

- last clean ups and deployment

- updated the about page

- prepare presentation

- Gravity Sensor: The gravity sensor measures the acceleration effect of Earth's gravity on the device enclosing the sensor. It is typically derived from the accelerometer, where other sensors (e.g. the magnetometer and the gyroscope) help to remove linear acceleration from the data.
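
A minimal sketch of the simplest version of this idea, assuming only raw accelerometer samples are available: a low-pass filter keeps the slowly changing gravity component, and subtracting it leaves the linear acceleration. Real gravity sensors usually fuse in gyroscope and magnetometer data as described above, so this is only an approximation. The function name `splitGravity`, the `{x, y, z}` sample shape and the smoothing factor `alpha` are assumptions for illustration.

```javascript
// Split raw accelerometer samples into gravity and linear acceleration.
// alpha close to 1 = heavier smoothing (the gravity estimate reacts slowly).
function splitGravity(samples, alpha = 0.8) {
  const gravity = [];
  const linear = [];
  // Start the estimate at the first sample so a resting device is stable.
  let g = samples.length > 0 ? { ...samples[0] } : { x: 0, y: 0, z: 0 };

  for (const s of samples) {
    // Exponential low-pass filter per axis.
    g = {
      x: alpha * g.x + (1 - alpha) * s.x,
      y: alpha * g.y + (1 - alpha) * s.y,
      z: alpha * g.z + (1 - alpha) * s.z,
    };
    gravity.push(g);
    // What remains after removing gravity is the linear acceleration.
    linear.push({ x: s.x - g.x, y: s.y - g.y, z: s.z - g.z });
  }
  return { gravity, linear };
}

// Usage: a device lying still measures gravity only.
const resting = Array.from({ length: 5 }, () => ({ x: 0, y: 0, z: 9.81 }));
const restingResult = splitGravity(resting);
```

A higher `alpha` gives a steadier gravity estimate but lets slow movements leak into it, so the value would need tuning per recording.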

- create product comps

- explanation window at the beginning + auto rotate

- clean up upload file (less textures..)

- resource for sensor data https://www.w3.org/TR/motion-sensors/#gravity-and-linear-acceleration

- swimming: only simple sphere noise because the data recording stopped

- create still frames

- sound edit/design

- final beautification for each sport with color, shapes, textures.. (doable)

- boulder sound recording/ design (& swim/skate)

- heroku push

- create better narrative/connection for sports to viz (with text and sound)

- show hide info text works

- started with sound design in Ableton Live 10 using granular synthesis; should layer more noise, add delay

- test deployment (sound starts with sound button.. maybe add start button at beginning for this)

- presentation topics:

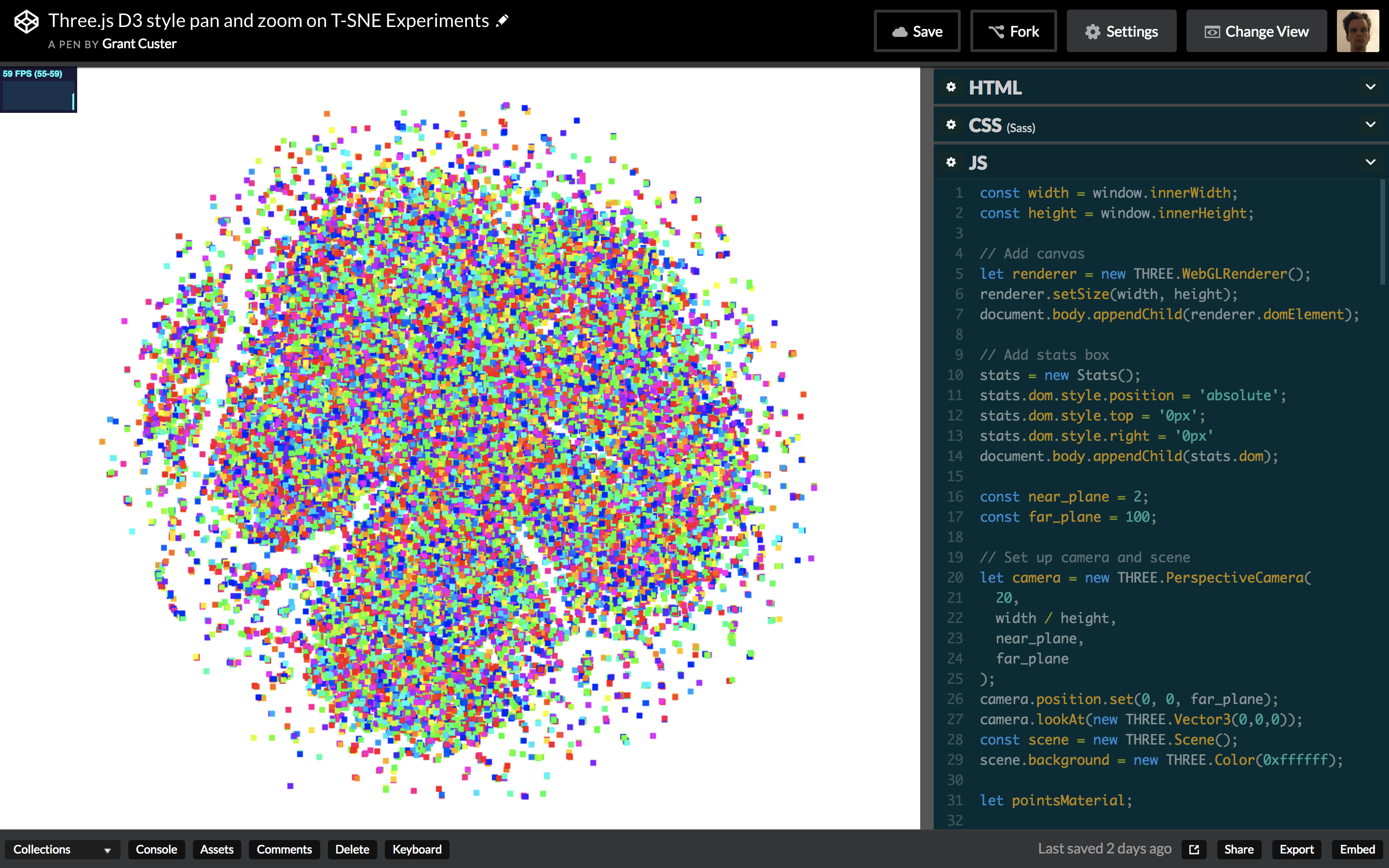

- technical development: find balance between visual style and performance, compromise

- visual development:

- picture of coral & other vis elements -> combine various shapes

- meshline from example

- sphereNoise from mood

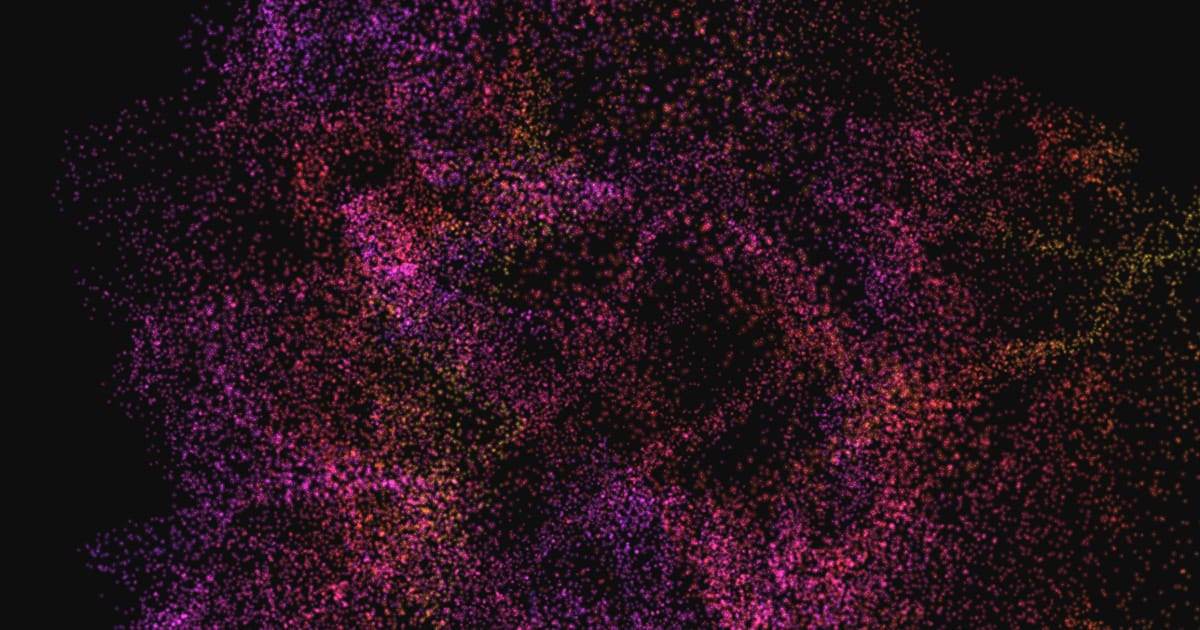

- particles from beginning csv file (inspo chalk explosion)

- sound vis from sound example - liked mesh

- narrative of data

- switch between sounds works now - had to stop audio before switching
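
A rough sketch of the "stop before switching" fix, not the project's actual code. It assumes each sound object exposes `play()` and `stop()`, as `THREE.Audio` does; `createSoundSwitcher` and the sound names are hypothetical.

```javascript
// Switch between sounds, always stopping the current one first.
// `sounds` maps a name to an object with play() and stop() methods.
function createSoundSwitcher(sounds) {
  let current = null;

  function stopAll() {
    if (current !== null) {
      current.stop();
      current = null;
    }
  }

  function play(name) {
    if (!Object.prototype.hasOwnProperty.call(sounds, name)) {
      throw new Error(`Unknown sound: ${name}`);
    }
    const next = sounds[name];
    if (next === current) return; // already playing, nothing to do
    stopAll(); // stop the old sound before starting the new one
    next.play();
    current = next;
  }

  return { play, stopAll };
}
```

Keeping a single `current` reference means two sounds can never overlap, which is the situation the switch was running into.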

- sound stops when sound not visible

- improve sound animation (doable) Links: medium article, codepen, webaudio sandbox, other example

- first edit boulder sound file

- only show a data point if its distance to the previous point is above some value (fewer points that are too close together, more structure & better performance in fps)

387 data points (every point above certain distance to previous point) vs. 673 data points (every 12th data point)
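
The two thinning strategies compared above could look roughly like this. It is a sketch under assumptions: points are `{x, y, z}` objects, the function names are made up, and the distance is measured against the last kept point (the note does not say whether it was the last kept or the previous raw point).

```javascript
// Euclidean distance between two {x, y, z} points.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Keep a point only if it is farther than minDistance from the last kept point.
function filterByDistance(points, minDistance) {
  const kept = [];
  for (const p of points) {
    const last = kept[kept.length - 1];
    if (last === undefined || distance(last, p) > minDistance) {
      kept.push(p);
    }
  }
  return kept;
}

// Alternative: keep every nth point regardless of position.
function everyNth(points, n) {
  return points.filter((_, i) => i % n === 0);
}
```

The distance filter adapts to the movement (dense clusters collapse, fast movements keep their detail), while `everyNth` thins evenly in time.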

- update light orientation

- downloaded all js scripts on index.html

- sound edit swimming file

- testing sound switch (not working yet)

- fix GUI functionality (doable) - It's possible to switch between sports now :)

- remove() function was the trick to blend out 3D Objects source

- restructure code.. for gui - every sport has a 'sport'Vis() function

- improve performance for smoother animation - depends mostly on the number of sphereNoise objects

- Solution: fewer spheres, no default animation (can be turned on in gui), adjusting amount of data points per sport per visualisation style

- improve performance for smoother animation - add() and remove() instead of sportViz() in render()
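
A sketch of that pattern, assuming every sport's objects are built once up front (for example as a `THREE.Group`) and the scene only needs `add()` and `remove()`, which three.js `Object3D` provides. `createSportSwitcher` and `sportGroups` are hypothetical names, not the project's code.

```javascript
// Build each sport's objects once, then only add/remove them on a switch.
// `scene` is expected to behave like a THREE.Scene (add/remove methods);
// `sportGroups` maps a sport name to a prebuilt object.
function createSportSwitcher(scene, sportGroups) {
  let active = null;

  return function showSport(name) {
    if (!Object.prototype.hasOwnProperty.call(sportGroups, name)) {
      throw new Error(`Unknown sport: ${name}`);
    }
    if (active === name) return; // already visible
    if (active !== null) {
      scene.remove(sportGroups[active]); // hide the previous sport
    }
    scene.add(sportGroups[name]); // show the new one
    active = name;
  };
}
```

With this in place, the render loop only has to draw the scene; no geometry is rebuilt per frame, which is where the fps gain would come from.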

- fixed some console errors

- image capture button: https://codepen.io/shivasaxena/pen/QEzAAv , with this: https://jsfiddle.net/2pha/art388yv/

- added description of data: The gravity sensor provides a three-dimensional vector indicating the direction and magnitude of gravity. Typically, this sensor is used to determine the device's relative orientation in space.

- Meshline links: git, demo, splinecurve, three constan.spline

- more research on switching between sports

- fixed one csv bug line in pointCloud

- color adjustment with sport change (should add material/ updateNoise function for every sport - swimming more reflective..)

- gui functionality: blend in/out data sets (except gravity)

- line animation

- improved performance (fewer sphereNoise data points, starting without animation)

- created one summary csv of sports

- check in with Lena - suggestions:

- line animation

- more abstract sound design

- pulsing light

- image capture button Links: https://codepen.io/shivasaxena/pen/QEzAAv , https://jsfiddle.net/2pha/art388yv/

- important to show creative/technical process in final presentation (reflect on it..)

- The gravity sensor provides a three-dimensional vector indicating the direction and magnitude of gravity. Typically, this sensor is used to determine the device's relative orientation in space.
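
To make "direction and magnitude" concrete, here is a small sketch that splits a gravity reading into its length, its unit direction and a tilt angle. The `{x, y, z}` shape, the name `describeGravity` and the choice of measuring tilt against the device z axis are assumptions for illustration.

```javascript
// Magnitude, unit direction and tilt of a gravity vector {x, y, z}.
function describeGravity(g) {
  const magnitude = Math.hypot(g.x, g.y, g.z);
  if (magnitude === 0) {
    // No direction can be derived from a zero vector.
    return { magnitude: 0, direction: { x: 0, y: 0, z: 0 }, tiltDeg: NaN };
  }
  const direction = {
    x: g.x / magnitude,
    y: g.y / magnitude,
    z: g.z / magnitude,
  };
  // Angle between gravity and the device z axis, in degrees.
  // Clamp to [-1, 1] so rounding errors cannot push acos out of range.
  const cosTilt = Math.min(1, Math.max(-1, direction.z));
  const tiltDeg = (Math.acos(cosTilt) * 180) / Math.PI;
  return { magnitude, direction, tiltDeg };
}
```

A device lying flat would give a tilt near 0 degrees, and one standing on its edge a tilt near 90 degrees, which is the kind of orientation change the visualisation picks up.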

- should add description of data

- clean up code

- check in summary https://github.com/aeschi/Orientation_Project/master/currentState.md

- work on gui

Bouldering

Swimming

Skating

GUI Interaction (not properly working yet)

Demo

- gradient/climbing grip texture for noiseSpheres (possible to adjust color with .color, but not the opacity)

- screenshots/recording for check in

- audio implementation (animation influenced by sound?)

- window resize

- audio implementation (doesn't work yet)

- animation & composition

- color palette

- light/environment adjustments

- add shadow to plane

- points color (default white for all points)

- idea: morphing 3d sports objects to acceleration data tutorial, and this morphing shoe code

- exchanged orbit controls (zoom function)

- bit of clean up

- test lineMesh shadow (not really working..)

- star shaped meshline

- more visual testing

- should try out: use MeshLambertMaterial, set envMaps to reflective

- can I use buffer geometry?

- half balls with phiStart etc..

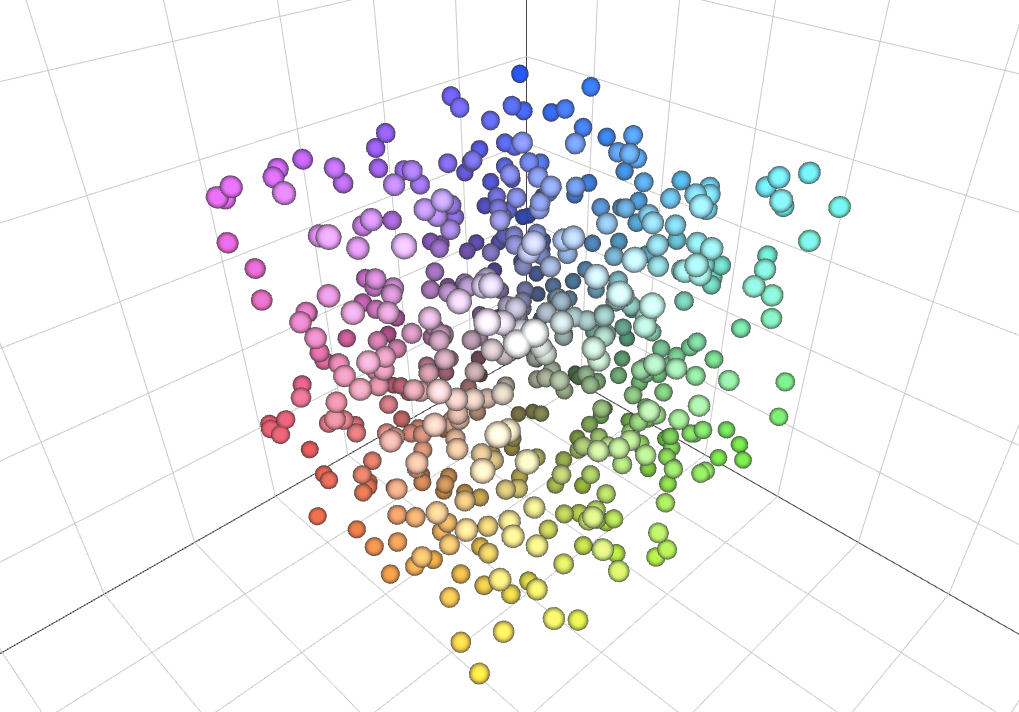

- some shape tests with material, noise, color, amount of points:

- editing some photos

- transferring and sorting recorded data

- recorded skating with roman

- editing some photos

- added line animation

- point cloud explosion (animation)

- general animation testing

- should add:

- sound design (bouldering: hands smearing & clap, swimming: splash, cycling: chain, brakes, skating: street pop sound, ice skating: ice)

- spherenoise 3d object back into scene for more 3dish style

- color code (bouldering: white chalk point cloud, swimming: blue water splash particles)

- more research on animation with KeyFrames & Quaternion & AnimationClip

- https://threejs.org/examples/?q=misc#misc_animation_keys

- https://stackoverflow.com/questions/40434314/threejs-animationclip-example-needed

- parsing-and-updating-object-position-for-animation-in-three-js

- https://threejs.org/docs/index.html#api/en/math/Quaternion

- https://threejs.org/docs/index.html#api/en/animation/AnimationClip

- https://threejs.org/examples/jsm/animation/AnimationClipCreator.js

- Line Animations

- https://discourse.threejs.org/t/line-animations/6279

- https://discourse.threejs.org/t/drawing-with-three-linedashedmaterial/3473?u=prisoner849

- https://threejs.org/docs/#manual/en/introduction/How-to-update-things

- recording boulder session

- saving data and taking a first look

- more research about animation

Next steps:

- try out noise distortion with all points for animation

- add slight rotation of all elements

- switch between data sets with gui

- added gui and stats to working file

- possible data from apple watch sensorLog

- more light testing:

- performance tests:

- animation tests:

- csv integrated (back to d3 version 3)

- access to rows fixed :)

- added animated cube

- mouse orientation with zoom

- creating new basic three js scene

- with lighting and endless space/horizon/fog inspo

- with gui -> need to combine with dataviz and integrate csv file directly (not via upload) & try shader

- added rotation to 3D objects (spheres)

- bundled sphere objects into Object3D Group

- adding more lights to scene (hemispheric, spotlight,..)

- check for app that records sound in background (AVR App + decibel in SensorLog)

- Audio Visualisations:

- used data set csv with several sensors and pulled different data with unfiltered, lowPass arrays..

- Problem: need to change position of datasets so they're at the same "origin/location" in space

- added map function for color gradient (slows it down quite a bit..?)
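
A sketch of what such a map function for a color gradient might look like; it is not the code from the project. `mapRange` mirrors the signature of p5.js's `map()`, while `gradientColor` and the `{r, g, b}` color shape (components from 0 to 1) are assumptions.

```javascript
// Linearly re-map value from [inMin, inMax] to [outMin, outMax].
function mapRange(value, inMin, inMax, outMin, outMax) {
  if (inMax === inMin) return outMin; // avoid division by zero
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Blend between two RGB colors (components 0..1) based on a data value.
function gradientColor(value, min, max, from, to) {
  // Clamp so values outside the data range stay on the gradient ends.
  const t = Math.min(1, Math.max(0, mapRange(value, min, max, 0, 1)));
  return {
    r: from.r + (to.r - from.r) * t,
    g: from.g + (to.g - from.g) * t,
    b: from.b + (to.b - from.b) * t,
  };
}
```

If the slowdown comes from the mapping itself, one thing to try would be computing `min` and `max` once per data set and the colors once at load time, rather than inside the animation loop.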

- check for app that records sound in background (AVR App + decibel in SensorLog). Link: Music visualization with Web Audio and three.js

- write down exact protocol for how to record data - and while recording (on Tuesday)

- decide on swimming (I guess I can just record it..)

- try creating a mesh array so it can be animated

- go through animate function & try to animate data with sound or heart rate or other data set

- add scroll function

- add GUI (blend in/out data sets)

- use data set csv with several sensors and pull different data with unfiltered, lowPass arrays.. (rename them)

- color scheme for 3d objects

- maybe try one 3d object created out of data points as vertices

- mix 2/3D look

- integrate csv file load in js file

- added 3d object (cubes)

- better understanding of d3 (how data is read) and animate function

- added time aspect to each data point with let x = xScale(data.unfiltered[i].x + i * 0.0005); the offset factor should depend on the range of the dataset
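
One way the hard-coded 0.0005 could be derived from the data range, as a sketch only. It assumes rows are objects with a numeric `x` (like `data.unfiltered[i]` after number conversion); `addTimeOffset` and the `spread` parameter are hypothetical.

```javascript
// Add a time-based offset to each x value, scaled to the data range.
// spread = how far the last point is shifted, as a multiple of the x range.
function addTimeOffset(rows, spread = 1) {
  if (rows.length === 0) return [];

  // Find the x range with a loop (safe for very long recordings).
  let min = Infinity;
  let max = -Infinity;
  for (const row of rows) {
    if (row.x < min) min = row.x;
    if (row.x > max) max = row.x;
  }

  // Offset per index, replacing the fixed 0.0005.
  const step = rows.length > 1 ? ((max - min) * spread) / (rows.length - 1) : 0;
  return rows.map((row, i) => ({ ...row, x: row.x + i * step }));
}
```

The shifted values would then go through `xScale` as before; with `spread = 1` the last point ends up moved by exactly one data range, whatever the sensor or recording length.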

- connected data points with lines (only x coordinate = smooth line, x,y,z = more jagged line)

- testing apple watch/iphone data from sensorLog and heartgraph (motion pitch, roll, yaw looks nice)

- should use high Hz rate for more data points = better visuals

- Camera: Sony, iPhone, Hasselblad/ Nikon

- Backup microphone (from camera?)

- Sports: Bouldering, Skating, Swimming, Ice Skating, Cycling

- iPhone/ Apple Watch

- Sensory data from

- iPhone: accelerometer, gyroscope, magnetometer

- Apple Watch: accelerometer, gyroscope (rotation)

- adjust time table

- created website with 'About', 'Home' & 'Compare Sports' routes

- ABOUT page with some basic information

- HOME page with slideshow of visualizations/athletes

- COMPARE SPORTS page with centered Three.js canvas

- decided on primary sports: bouldering, swimming, skating

- secondary: running, cycling, yoga

- Test Athlete Portrait + Visual:

- smaller particle size works better (maybe random between 0.5 - 1.5 or influenced by another factor like sound)

- mirroring the data looks kinda cool

- random functions in js:

      // returns a random float between min (inclusive) and max (exclusive)
      function getRandomFloat(min, max) {
        return Math.random() * (max - min) + min;
      }

first OK visual output with small particles

Change particles by geometry or by material?

- adjusting shapes, color, input for 3D Scatterplot example

- First Look: Using Three.js for 2D Data Visualization

- Update: Using three.js for 2D data visualization

- Understanding scale and the three.js perspective camera

- p5.js or three.js

- need to check where it's easier to load csv files

- three & d3: 3D Scatterplot with csv upload

- example plot with csv upload

- audio/pulse as csv file?

- introduction to data loading

- create styleframes

- gradient shader in threejs

- gradient colors in threejs

- React for interactive things (public "stays mostly unchanged", src: this is where the editing happens)

- Props: information is passed down through the tree structure

- State: variables whose values can change (count++ or + - button)

- index.html -> index.js -> app.js ...

- mocap library mixamo adobe

- import for packages: npm install ..

- import * as XY / {SpecificName} / DefaultExport from 'jasonFileName';

- importing scripts offline

- learn about bundling modules (browserify/ webpack)

- in project file (three.js) npm init

- npm install webpack --save-dev (dev dependency only, not for production)

- codepen

- three.js documentation getting started: image path - where am I starting the server from (three folder)

- effects anaglyph - three.js

- HDRI as environment like in effects anaglyph - Sports environment as connection to sport

- Glitch look

- research for prerecorded mocap data, sound data, pulse,..

- test out visualisation in p5.js, three.js, only 3 joints (gradient look?)

- (search for tracking devices)

I am a student in the Creative Technologies master's program at the Film University Babelsberg near Berlin. Currently, I am working on a project on the abstract visualisation of sports movements, and I would love to collaborate with you.

Sports are goal- and performance-oriented, and we often forget about the beauty and poetic perspective of these movements. I want to capture the essence of these motions, which are mastered to perfection, and sensationalise them through abstract art.

Here is a short description of my project: The idea is to generate a visualisation of several sport disciplines by recording specific data. This will mainly be data sources such as motion tracking, but also non-visual factors like the sound and pulse of the athletes. Possible sports could be climbing, skating, dancing, cycling and others. The captured data will then be processed with JavaScript to enable an artistic visualisation. This will be done with the help of libraries such as p5.js, three.js or babylon.js. The output will be a combination of data-driven movement and artistic choices to achieve a visually appealing animation. After completion of the project, the viewer will be able to interact with the visual output on a web-based platform.

The Xsens technology seems to be perfect for capturing movement in various environments, which would not be possible on a conventional motion capture stage. Would it be possible to get support for my project from Xsens by lending me the MVN Animate Motion Capture System for a few weeks in January or February? I would very much appreciate any kind of help and would of course credit the support of Xsens in my project.

Please let me know if you have any questions about my project or if an official statement by my professor is needed.

Machine Learning - Human Pose Estimation: [BodyPix - person segmentation](https://medium.com/tensorflow/introducing-bodypix-real-time-person-segmentation-in-the-browser-with-tensorflow-js-f1948126c2a0)

Footage analysis + rotation, tilt, location from iPhone? + pulse + sound

Three.js is a cross-browser JavaScript library and Application Programming Interface used to create and display animated 3D computer graphics in a web browser. Three.js uses WebGL

- interactive part: viewer can guess sport

- still images -> mix of athletes/face and viz

- concept finalisation

- moodboard

- research for prerecorded mocap data, sound data, pulse,..

- test out visualisation in p5.js

- search for tracking devices

- create workflow

- test out visualisation in p5.js

- style testing

- search for tracking devices

- decide on visual style

- recording the data input myself

- platform/website for final product

- polishing animation

- interaction with visualisation

- beautification of input features

- still frames from animation for product design

- preparation for presentation

Finish 🎊

- Circle color gradient

- Xsens: need to contact them, maybe bought by university

- mobile devices

- Kai's Motion Tracking Suit

- High end look, prerendered (Houdini, C4d, Maya) houdini tutorial OR

- web based, interactive (P5.js, D3.js, RAW Graph,..)

- visually high end look

- nice (fluid) animation

- interaction

- still frames for branding

- recording data myself

- multiple sport disciplines

- visually 'ok'

- no animation

- no interaction

- one sport (movement)

- using prerecorded data

Independent of my work would be the sound design (maybe film music students/friends)

I created a moodboard on pinterest

folder with moods


I really like the gradient, soft glow, steamy look like here: